ISSN: 0970-938X (Print) | 0976-1683 (Electronic)

Biomedical Research

An International Journal of Medical Sciences

Review Article - Biomedical Research (2018) Volume 29, Issue 6

A review-classification of electrooculogram based human computer interfaces

S. Ramkumar*, K. Sathesh Kumar, T. Dhiliphan Rajkumar, M. Ilayaraja and K. Shankar

School of Computing, Kalasalingam Academy of Research and Education, Krishnankoil, Virudhunagar (Dt), Tamilnadu, India

- *Corresponding Author:

- S Ramkumar

Department of Computer Applications

Kalasalingam Academy of Research and Education, India

Accepted date: November 08, 2017

DOI: 10.4066/biomedicalresearch.29-17-2979

Today there is an increasing need for assistive technology to help people with disabilities attain some level of autonomy in communication and movement. People with disabilities, especially those with total paralysis, are often unable to use biological communication channels such as voice and gestures, so digital communication channels are required. Research on Human Computer Interaction (HCI) strives to help such individuals by converting human intentions into control signals that operate devices. Electrooculography is the technique of measuring the corneo-retinal potential associated with eye movement. Eye movements are measurable behaviors, and their measurements provide a sensitive means of learning about cognitive processes and visual stimuli. The human eye conveys a great deal of information through the direction of its movements, and the direction in which an individual is looking shows where his or her attention is focused. Eye movements are commonly divided into three categories: saccades, in which the eyes quickly change the point of fixation; smooth pursuit movements, in which the eyes closely follow a moving object at a steady, coordinated velocity rather than in saccades; and vergence movements, in which the two eyes rotate in opposite directions. By tracking eye position, useful interfaces can be developed that allow the user to communicate with and control devices in a more general way. This paper presents the basic ideas behind the feature extraction and classification techniques used to categorize eye movement tasks, and discusses the issues associated with Electrooculography-based Human Computer Interfaces.

Keywords

Electrooculography, Human computer interaction, Neural network, Hidden Markov model, Support vector machine, Clustering and fuzzy logic classification

Introduction

Communication is one of the basic necessities for a human being to interact with society [1]. People with disabilities find it difficult to achieve a good quality of life because of their impairments. The number of elderly disabled people is growing every day, which makes control devices that are easy for disabled people to use increasingly essential. An alternative means of communicating without speech or body movement is important for improving the quality of life of disabled people and reducing their dependence on caretakers. Eye-controlled communication interfaces based on voluntary eye movement have been developed for immobilized individuals with motor neuron diseases. Assistive robots provide an effective communication channel that reduces the impact of users' limitations by compensating for their specific impairments. Human Computer Interaction systems have become an increasingly important part of daily life for overcoming such disabilities [2,3]. HCI studies the interaction between people and machines in order to detect specific activity patterns and translate them into meaningful instructions [4]. The basic objectives of HCI are to make machines more practical and more responsive to users' requirements. To offer the best possible interface within given constraints, HCI designers aim to build systems that narrow the gap between the user's cognitive model of what they want to achieve and the computer's understanding of the user's actions [5].

In recent years EOG signals have been used as control signals for various HCI applications, such as mobile robot control [6], mouse cursor control [7], a tooth-click controller [8], a hospital alarm system [9], brain-computer interfaces [10], a mouse controller using an infrared head-operated joystick [11] and electric wheelchair control [12]. This study examines the feasibility of identifying suitable algorithms for classifying different eye movement tasks when designing EOG-based HCI systems. The paper is organized as follows: Section II discusses the EOG, Section III explains the extraocular muscles and electrode placement, Section IV describes the methodology for signal acquisition, and Section V reviews the classification techniques used in earlier studies of EOG-based HCI. Finally, Section VI presents the conclusions.

Electrooculography

The potential difference between the cornea and the retina, measured across the front and back of the eye, is called the electrooculogram (EOG). EOG signals typically range from 50 to 3500 μV, with frequencies between 0 and 100 Hz. The eye therefore behaves as an electrical dipole between its front and back: any change in eye position changes the orientation of the dipole's positive and negative poles, and this change can be measured. The EOG is approximately linear for gaze angles within ±50° for horizontal eye movement and ±30° for vertical eye movement, and it changes by about 20 μV for each degree of eye movement [13,14].
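Because the EOG is approximately linear in gaze angle over this range, a rough angle estimate is simply the amplitude divided by the sensitivity. The Python sketch below assumes the nominal 20 μV per degree quoted above and a baseline-corrected amplitude; the function and constant names are hypothetical, and practical systems calibrate the sensitivity for each subject and electrode placement.

```python
# Minimal sketch, assuming the nominal ~20 uV/degree sensitivity and the
# linear ranges quoted above; real systems calibrate this per subject.
UV_PER_DEGREE = 20.0
LINEAR_LIMIT_DEG = {"horizontal": 50.0, "vertical": 30.0}


def eog_to_gaze_angle(amplitude_uv, channel="horizontal", uv_per_degree=UV_PER_DEGREE):
    """Estimate the gaze angle (degrees) from a baseline-corrected EOG amplitude.

    Raises ValueError when the estimate leaves the approximately linear range.
    """
    angle = amplitude_uv / uv_per_degree
    limit = LINEAR_LIMIT_DEG[channel]
    if abs(angle) > limit:
        raise ValueError(f"{angle:.1f} deg is outside the linear range of +/-{limit} deg")
    return angle


print(eog_to_gaze_angle(400.0))               # 20.0
print(eog_to_gaze_angle(-300.0, "vertical"))  # -15.0
```

The range check matters because outside roughly ±50° (horizontal) and ±30° (vertical) the linear model no longer holds.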

Extraocular Muscles and Electrode Placement

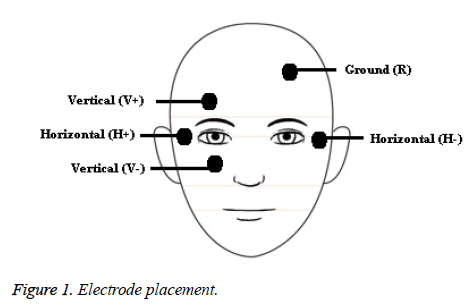

The six muscles of the human eye that control eye movements and eye position are called the extra-ocular muscles. These muscles and the actions performed by each are listed in Table 1. To record horizontal and vertical eye movements, including elevation and depression of the eye, four electrodes are placed around the eye and a fifth electrode, placed on the forehead, acts as the ground, as shown in Figure 1.

| Extra-ocular muscle | Activity performed by the muscle |
|---|---|
| Medial Rectus (MR) | Moves the eye inward, toward the nose. |
| Lateral Rectus (LR) | Moves the eye outward, away from the nose. |
| Superior Rectus (SR) | Moves the eye upward and rotates the top of the eye toward the nose. |
| Inferior Rectus (IR) | Moves the eye downward and rotates the top of the eye away from the nose. |
| Superior Oblique (SO) | Rotates the top of the eye toward the nose and moves the eye downward and outward. |
| Inferior Oblique (IO) | Rotates the top of the eye away from the nose and moves the eye upward and outward. |

Table 1. Activities of the extra-ocular muscles.

Methodology

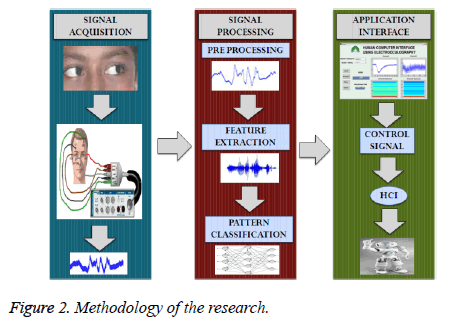

EOG signals recorded from human subjects performing different eye movement tasks were converted to digital form using the AD Power Lab instrument (T26) bio amplifier. The digitized signals were then preprocessed to remove contaminating artifacts, and feature extraction techniques were applied to extract the prominent features from the entire band. Each feature set was applied individually to neural networks that classify the signals into different eye movement patterns. The recognized patterns are mapped, through a graphical user interface, to control signals that operate the hardware. The complete methodology of electrooculogram-based human computer interface research is shown in Figure 2.

Background

Electrooculogram-based human computer interfaces have been applied effectively and widely in developing biomedical devices that meet the basic needs of disabled people without relying on caretakers, and many well-designed EOG-based HCIs have been created for people with disabilities. This section reviews some of the important EOG-based HCI studies developed for immobilized people, grouped by classification technique.

Using neural network classification techniques

Barea et al. developed a system to control and guide a mobile robot. A radial basis function (RBF) neural network was used to detect saccadic eye movements and fixations. To reduce the drift of the resting potential over time, a high-pass AC differential amplifier with a cutoff frequency of 0.05 Hz was used, with selectable gains of 500, 1000, 2000 and 5000. An RBF network with one hidden layer identified where the user was looking, and the resulting control signals were used as commands to drive an electric wheelchair through a variety of EOG-based eye movements. The study showed that disabled users typically need about 15 min of training to use the system comfortably [15,16].
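The 0.05 Hz high-pass stage can be sketched digitally as a first-order RC filter. This is an illustrative Python sketch, not Barea et al.'s amplifier design; the 250 Hz sampling rate and the test signal are assumptions.

```python
import math


def high_pass(samples, fs_hz, cutoff_hz=0.05):
    """First-order high-pass filter, a digital analogue of AC coupling.

    y[n] = a * (y[n-1] + x[n] - x[n-1]), with a = RC / (RC + dt)
    and RC = 1 / (2 * pi * cutoff_hz).
    """
    if not samples:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs_hz
    a = rc / (rc + dt)
    out = []
    prev_x, prev_y = samples[0], 0.0   # start settled on the first sample
    for x in samples:
        y = a * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


# Hypothetical input: a 300 uV offset plus a 100 uV step (a saccade to a new
# fixation) at sample 250, sampled at 250 Hz for 24 s.
fs = 250
signal = [300.0] * 250 + [400.0] * (fs * 24 - 250)
filtered = high_pass(signal, fs)
```

With a 0.05 Hz cutoff the time constant RC is about 3.2 s, so the constant offset is removed and the onset of the saccade passes almost unchanged; a held fixation, however, also decays with the same time constant.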

Barea et al. also introduced an eye-controlled wheelchair, using a dimensional bipolar model to recognize four commands (forward, backward, right and left) with a system of five floating-metal body-surface electrodes. A salient feature of this system is its modularity, which makes it adaptable to the needs of each user according to the type and degree of handicap. Its main functional blocks were the power and motion controllers, the human machine interface, environment perception, and navigation and sensor integration. The study confirmed that the EOG signal varies with gaze direction and that this variation can easily be converted to gaze angles. An AC differential amplifier with a gain of 1000 to 5000, a high-pass filter with a cutoff frequency of 0.05 Hz and a low-pass filter with a cutoff frequency of 35 Hz were used to eliminate the drift of the resting potential and high-frequency noise. The results show that users need 10-15 min to learn to use the system effectively [17].

Sherbeny and Badawy proposed a method for detecting the gaze point from the EOG signals produced by the saccadic motion of the human eye, in order to control appliances such as an eye mouse and an eye document reader that assist handicapped people. Five silver chloride floating-metal body-surface electrodes were used, and signals were acquired from five subjects. The acquired signals were amplified by a differential amplifier with a gain of 1000 to 5000 and preprocessed in LabVIEW with a high-pass filter (cutoff 0.1 Hz) and a low-pass filter (cutoff 100 Hz) to remove external artifacts. To work in real time, the signals were integrated using three methods: the curved, trapezoidal and mid-ordinate rules. A multipoint detection procedure was then applied to the outputs of the three methods to extract the features, which were used to train and test neural network classifiers that detect the different eye positions. All three integration methods gave sensible results, but the multipoint detection technique performed best because it captured the eye movements reliably enough to control the computer interface application. Finally, four software and hardware applications were built to assist the handicapped community [18].
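The trapezoidal and mid-ordinate rules named above can be written for equally spaced EOG samples as follows. This is a hypothetical Python sketch: the window, sample spacing and function names are assumptions, and the "curved" method is not shown because its exact definition is not given here.

```python
def trapezoidal(samples, dt):
    """Composite trapezoidal rule over equally spaced samples (spacing dt)."""
    if len(samples) < 2:
        return 0.0
    return dt * (sum(samples) - 0.5 * (samples[0] + samples[-1]))


def mid_ordinate(samples, dt):
    """Composite mid-ordinate (midpoint) rule over equally spaced samples.

    Samples are grouped into panels of width 2*dt; the odd-indexed sample at
    the centre of each panel is its mid-ordinate, so len(samples) must be odd.
    """
    if len(samples) < 3 or len(samples) % 2 == 0:
        raise ValueError("need an odd number of samples (at least 3)")
    return 2.0 * dt * sum(samples[1::2])


# Hypothetical 100 ms window of EOG amplitude (uV) sampled every 10 ms.
window = [0, 20, 45, 70, 90, 100, 100, 95, 90, 85, 80]
print(trapezoidal(window, 0.01), mid_ordinate(window, 0.01))
```

Both rules are exact for a linear ramp; they differ on curved segments, which is where comparing methods becomes informative.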

Guven and Kara proposed a method to help physicians diagnose eye disease by distinguishing normal from subnormal eyes, using a feed-forward neural network (FFNN) to classify EOG signals. The signals were recorded from 32 normal and 40 subnormal subjects with a Tomey Primus 2.5 system, during saccades in dark and bright light over one-minute intervals. Features were extracted by comparing the amplitudes recorded in dark and bright light, and the differences between the two signals for each patient were used as the input of the network. The FFNN was trained with the Levenberg-Marquardt backpropagation algorithm. The classifier achieved 93.3% specificity, 94.1% sensitivity and a positive predictive value of 94.1% [19].
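The three reported figures follow from the confusion counts of a two-class test. A minimal Python sketch, treating "subnormal" as the positive class (an assumption); the counts in the example are hypothetical and are not the study's data.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity and positive predictive value from confusion counts.

    tp: subnormal eyes classified subnormal   fn: subnormal classified normal
    tn: normal eyes classified normal         fp: normal classified subnormal
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    ppv = tp / (tp + fp)
    return sensitivity, specificity, ppv


# Hypothetical counts, not the study's data.
sens, spec, ppv = diagnostic_metrics(tp=45, fp=10, tn=40, fn=5)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} ppv={ppv:.3f}")
```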

Banerjee et al. proposed an approach for developing electrical rehabilitation devices controlled by eye movements to help severely paralyzed people. EOG signals were acquired from five subjects (2 M and 3 F) aged 23 to 25 using a three-electrode system. The signals were band-pass filtered between 1 Hz and 15 Hz, and features were extracted using the wavelet transform. The features were then classified according to the direction of eyeball movement using a radial basis function network. The experiments achieved a recognition rate of 77.36% for both right and left movements using the RBF network [20].
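The wavelet family and feature definition are not stated above. As one possible illustration, the Python sketch below uses the Haar wavelet (an assumption) and takes the energy of each sub-band as a feature; with an orthonormal transform and a power-of-two length, the sub-band energies sum to the signal energy.

```python
import math


def haar_step(signal):
    """One level of the orthonormal Haar DWT; len(signal) must be even."""
    s = 1.0 / math.sqrt(2.0)
    pairs = range(0, len(signal), 2)
    approx = [s * (signal[i] + signal[i + 1]) for i in pairs]
    detail = [s * (signal[i] - signal[i + 1]) for i in pairs]
    return approx, detail


def wavelet_energy_features(signal, levels=3):
    """Energy of each detail sub-band (finest first) plus the final approximation."""
    features = []
    approx = list(signal)
    for _ in range(levels):
        if len(approx) % 2:
            approx = approx[:-1]   # drop one sample to keep the length even
        approx, detail = haar_step(approx)
        features.append(sum(d * d for d in detail))
    features.append(sum(a * a for a in approx))
    return features


print(wavelet_energy_features([1, 2, 3, 4, 5, 6, 7, 8]))
```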

Ramkumar et al. developed an HCI based on eye movements, electrooculography and neural networks. Singular value decomposition (SVD) and band power algorithms were used to extract features from different eye movements, and FFNN and ERNN classifiers were used to classify the signals. Average classification accuracy ranged from 83.36% to 98.50% for SVD features and from 84.60% to 98.46% for band power features using FFNN and ERNN. The study indicates that the dynamic network (ERNN) with band power features marginally outperforms the static network (FFNN) [21].
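Band power can be computed from the discrete Fourier transform of a window. The Python sketch below is an assumption about the feature, not the authors' code: it uses a direct DFT for clarity, and the band edges and sampling rate are chosen by the caller.

```python
import cmath
import math


def band_power(samples, fs_hz, f_low, f_high):
    """Mean power of the components between f_low and f_high (Hz).

    Uses a direct DFT (O(N^2), fine for short windows). One-sided bins are
    doubled so that a sinusoid of amplitude A in the band contributes A**2 / 2.
    """
    n = len(samples)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs_hz / n
        if not f_low <= freq <= f_high:
            continue
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        edge = k == 0 or (n % 2 == 0 and k == n // 2)   # bins without a mirror image
        power += (1.0 if edge else 2.0) * abs(coeff) ** 2
    return power / n ** 2


# Hypothetical 1 s window at 100 Hz containing a 10 Hz component.
window = [2.0 * math.sin(2 * math.pi * 10 * i / 100) for i in range(100)]
print(band_power(window, 100, 8, 12))
```

An FFT would be used in practice; the direct sum keeps the sketch dependency-free.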

Ramkumar et al. classified eleven eye movements recorded from twenty subjects using electrooculography and dynamic neural networks. Features based on Parseval's and Plancherel's theorems were used with neural networks to identify the eye movements, and the results were compared with those of a feed-forward network. The study shows that the time delay neural network (TDNN) marginally outperforms the FFNN, with average classification accuracies of 91.40% and 90.89% for the extracted features [22].
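For reference, Parseval's theorem states that a signal's energy is the same whether it is computed from the samples or from the spectrum; Plancherel's theorem is the corresponding statement for the continuous Fourier transform. With the DFT and Fourier transform conventions shown:

```latex
\sum_{n=0}^{N-1} \lvert x[n] \rvert^{2} = \frac{1}{N} \sum_{k=0}^{N-1} \lvert X[k] \rvert^{2},
\qquad X[k] = \sum_{n=0}^{N-1} x[n]\, e^{-j 2\pi k n / N}

\int_{-\infty}^{\infty} \lvert x(t) \rvert^{2}\, dt = \int_{-\infty}^{\infty} \lvert X(f) \rvert^{2}\, df,
\qquad X(f) = \int_{-\infty}^{\infty} x(t)\, e^{-j 2\pi f t}\, dt
```

How the reviewed studies turn these identities into features is not described here; one plausible reading, offered only as an assumption, is that the spectral energy is summed over selected frequency bins of each EOG window.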

Hema et al. applied static and dynamic networks to classify eleven eye movement signals, recorded from twenty subjects aged 19 to 45 with a five-electrode system, in order to develop a human computer interface for severely paralyzed people. The acquired signals were preprocessed using a notch filter with cutoff frequencies of 1 Hz and 50 Hz, and features were extracted using convolution and singular value decomposition (SVD) techniques. TDNN and FFNN classifiers were trained on the extracted features. Recognition accuracies were 90.99% and 90.10% for convolution features and 90.88% and 89.92% for SVD features, using TDNN and FFNN respectively. The experimental results show that convolution features with the time delay neural network are the most suitable for designing a nine-state HCI from eleven eye movement tasks [23].
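What distinguishes a TDNN from the static FFNN is that each input presents a feature together with its delayed values. A minimal Python sketch of the tapped-delay input feeding a single linear unit; the number of delays, the feature sequence and the weights are hypothetical.

```python
def tapped_delay_inputs(sequence, delays):
    """Build TDNN-style input vectors with a tapped delay line.

    Row n holds [x[n], x[n-1], ..., x[n-delays]], so each network input sees
    the current feature value together with its recent history.
    """
    if delays < 0:
        raise ValueError("delays must be non-negative")
    return [[sequence[n - d] for d in range(delays + 1)]
            for n in range(delays, len(sequence))]


def linear_unit(inputs, weights, bias=0.0):
    """A single linear neuron applied to one delayed input vector."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical feature sequence and weights, for illustration only.
rows = tapped_delay_inputs([0.1, 0.4, 0.9, 0.3, 0.2], delays=2)
outputs = [linear_unit(r, [0.5, 0.3, 0.2]) for r in rows]
print(rows, outputs)
```

A full TDNN stacks such delayed inputs at each layer and learns the weights; setting delays to 0 reduces it to an ordinary feed-forward input.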

Ramkumar et al. proposed a model for recognizing eleven eye movement tasks recorded from ten subjects, using features derived from Parseval's theorem. FFNN and TDNN classifiers were used to classify the eye movements, with identification accuracies ranging from 80.72% to 91.48% and from 85.11% to 94.18% respectively across the eleven tasks used to build a nine-state HCI. The results show that a TDNN with Parseval features is more suitable for designing nine-state HCI systems for elderly disabled people [24].

Ramkumar et al. proposed controlling hardware using electrooculography and HCI. Most earlier studies focused on conventional methods for developing two-, three- and four-state HCIs; this paper instead develops a multi-state HCI with nine states from eleven eye movements, including rapid and lateral movements. A dynamic neural network (LRNN) was used to identify the signals from twenty subjects. The results confirm that a nine-state HCI can be modeled [25].

Using hidden Markov model classification technique

Kim et al. designed an alternative human interface that enables people with severe motor disabilities to steer a wheelchair. Signals for four tasks were acquired with a four-electrode system from five subjects aged 25 to 52, all of whom had severe loss of motor function caused by traffic or hiking accidents. The signals were digitized with an ADS8343 converter, and features were extracted using linear prediction coefficients (LPC) and entropy. To estimate time-varying LPC parameters, each 0.5 s signal, sampled at 512 Hz, was first divided into short segments called frames, and LPCs were then computed for each frame. A hidden Markov model (HMM) classified the four task signals, and the control signals were sent to a digital signal processor kit (TMS320-C6711B) to validate and test the wheelchair in the four conventional directions. The overall classification rate across the five subjects was about 97.2% [26].
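The framing and LPC steps can be sketched with the autocorrelation method and the Levinson-Durbin recursion. The 0.5 s window at 512 Hz gives 256 samples; the 64-sample frame length, the model order of 4 and the test signal are assumptions, since they are not stated above.

```python
def split_frames(signal, frame_len):
    """Non-overlapping frames; a trailing partial frame is dropped."""
    return [signal[i:i + frame_len] for i in range(0, len(signal) - frame_len + 1, frame_len)]


def autocorrelation(frame, max_lag):
    n = len(frame)
    return [sum(frame[i] * frame[i + lag] for i in range(n - lag)) for lag in range(max_lag + 1)]


def lpc(frame, order):
    """LPC coefficients a[1..order] by the Levinson-Durbin recursion.

    Convention: x[n] is predicted as sum(a[k] * x[n - k] for k = 1..order).
    """
    r = autocorrelation(frame, order)
    a = [0.0] * (order + 1)          # a[0] is unused
    error = r[0]
    for i in range(1, order + 1):
        if error <= 0.0:              # silent or perfectly predicted frame
            break
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / error
        updated = a[:]
        updated[i] = k
        for j in range(1, i):
            updated[j] = a[j] - k * a[i - j]
        a = updated
        error *= 1.0 - k * k
    return a[1:]


# 0.5 s at 512 Hz = 256 samples; 64-sample frames and order 4 are assumptions.
fs, frame_len, order = 512, 64, 4
signal = [0.9 ** (n % 64) for n in range(fs // 2)]   # hypothetical test signal
features = [lpc(f, order) for f in split_frames(signal, frame_len)]
```

Each frame yields one coefficient vector, so the sequence of vectors tracks how the signal's short-term spectral shape changes over the 0.5 s window.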

Fang et al. developed an efficient communication channel for people with disabilities, especially those who cannot move any muscles except their eyes, using EOG to control a speech synthesizer based on the T3 WFST decoder, which supports live decoding at the end of the input. Data for five tasks were collected from two subjects with a six-electrode, six-channel system. An HMM-based speech recognition method was applied to recognize the eye activity. The results showed a recognition precision of 96.1% for the five classes of eye events, and also that EOG signals are subject-dependent, varying from subject to subject [27].
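Fang et al. decode with a WFST-based speech decoder. A simpler, commonly used way to apply HMMs to isolated eye movement patterns is to keep one model per class and pick the model with the highest likelihood, computed with the forward algorithm. The Python sketch below shows only that scoring step, assuming discrete observation symbols; the toy models are hypothetical (in practice they would be trained, for example with Baum-Welch).

```python
import math


def forward_log_likelihood(obs, start, trans, emit):
    """log P(obs | model) for a discrete-observation HMM (scaled forward algorithm).

    start[i]    : probability of starting in state i
    trans[i][j] : probability of moving from state i to state j
    emit[i][o]  : probability that state i emits symbol o
    """
    n = len(start)
    log_lik = 0.0
    alpha = None
    for t, o in enumerate(obs):
        if t == 0:
            alpha = [start[i] * emit[i][o] for i in range(n)]
        else:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][o]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")
        alpha = [a / scale for a in alpha]   # rescale to avoid underflow
        log_lik += math.log(scale)
    return log_lik


def classify(obs, models):
    """Return the name of the class model with the highest likelihood."""
    return max(models, key=lambda name: forward_log_likelihood(obs, *models[name]))


# Hypothetical one-state-per-class models over symbols 0 = "flat", 1 = "deflection".
models = {
    "rest": ([1.0], [[1.0]], [[0.9, 0.1]]),
    "saccade": ([1.0], [[1.0]], [[0.2, 0.8]]),
}
print(classify([1, 1, 0, 1], models))
```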

Using movement classifier deterministic finite automata classification technique

Trikha et al. introduced a technique for classifying sixteen eye movement signals, covering both horizontal and vertical movements, recorded from five subjects with five silicon electrodes. The signals were preprocessed and denoised with a comparator and NOR gate network to eliminate unwanted frequencies. The preprocessed data were then fed to a Peak Detection Deterministic Finite Automaton (PDDFA) that recognizes the positive peaks, negative peaks and rest zone in the EOG. The output of the PDDFA was passed to a Movement Classifier Deterministic Finite Automaton (MCDFA) that identifies the eye movement performed by the user, and the resulting control signal was used to operate medical instrumentation devices [28].
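The two-stage automaton idea can be illustrated in Python: a peak-detection stage turns the signal into zone-entry events, and a movement-classifier stage consumes those events. The threshold, the symbol alphabet and the two movement patterns recognized here are hypothetical, not the automata defined by Trikha et al.

```python
def symbolize(samples, threshold):
    """Label each sample 'P' (positive peak zone), 'N' (negative) or 'R' (rest)."""
    return ["P" if x > threshold else "N" if x < -threshold else "R" for x in samples]


def peak_events(symbols):
    """Peak-detection automaton: the state is the current zone, and an event is
    emitted each time a peak zone is entered, so a multi-sample peak counts once."""
    state, events = "R", []
    for s in symbols:
        if s != "R" and s != state:
            events.append(s)
        state = s
    return events


# Movement-classifier automaton: (state, event) -> next state.
TRANSITIONS = {
    ("start", "P"): "saw_P",
    ("start", "N"): "saw_N",
    ("saw_P", "N"): "accept_PN",
    ("saw_N", "P"): "accept_NP",
}
LABELS = {"accept_PN": "positive-then-negative", "accept_NP": "negative-then-positive"}


def classify_movements(events):
    state, labels = "start", []
    for e in events:
        nxt = TRANSITIONS.get((state, e))
        if nxt is None:                              # unexpected event: restart on it
            nxt = TRANSITIONS.get(("start", e), "start")
        if nxt in LABELS:                            # accepting state: report, then reset
            labels.append(LABELS[nxt])
            nxt = "start"
        state = nxt
    return labels


eog_uv = [0, 0, 150, 160, 0, -140, -150, 0, 0, -130, 0, 140, 0]
print(classify_movements(peak_events(symbolize(eog_uv, threshold=100))))
```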

Khan et al. proposed a design for controlling a robotic arm with the eyes, using EOG signals acquired from 5 subjects. The signals were amplified with an INA126P instrumentation amplifier, low-pass filtered with a cutoff frequency of 47 Hz, and passed to a PDDFA to identify positive peaks, negative peaks and the rest zone. The PDDFA output was used as the input of the MCDFA to determine the eye movement performed by the user. The computer communicated with a microcontroller through a serial port interface to produce the square waves needed to drive the servo motors of the arm. The system aims to let the user move objects using only eyeball movements or eye blinks, with a response time of 100 ms and high sensitivity [29].

Postelnicu et al. implemented an EOG-based interface for HCI involving keyboard, mouse, joystick and EOG control. Signals were collected from fourteen individuals aged 23 to 30 with a five-electrode system for blink, wink and fixation tasks. The acquired signals were band-pass filtered from 0.05 Hz to 30 Hz, using a low-pass filter with a cutoff frequency of 30 Hz and a high-pass filter with a cutoff frequency of 0.05 Hz, and an additional 50 Hz notch filter suppressed line noise. A peak amplitude algorithm then extracted features for both horizontal and vertical eye movements; each user has unique peak amplitudes for each task. Classification used two techniques, one based on fuzzy logic and one on DFA. For each individual, the task completion time and the number of commands used in each test were calculated. The system achieved an accuracy of 95.63%, a specificity of 93.65% and a sensitivity of 97.31% [30].

Using clustering and fuzzy logic classification technique

Tsai et al. developed a communication method based on an eye-writing system. Two INA218 differential amplifiers, one for vertical and one for horizontal movement, amplified the signals with a gain of 2000, and the signals were sampled at 1000 samples per second and passed to a computer. EOG signals were acquired with a DAQPad 6020E from eleven individuals aged 20 to 28 using a five-electrode system. A 60 Hz notch filter removed power-line interference picked up during recording, after which baseline drift and crosstalk were removed to recover the genuine waveform. A clustering technique classified the EOG signals to recognize the ten Arabic numerals and four mathematical operators, attaining 95% accuracy, which the authors compare favorably with other systems; the experimental identification rates ranged from 50% to 100% [31].

Rezazadeh et al. developed an HCI that allows people with disabilities to interact with assistive systems for a better quality of life, using EOG signals acquired from ten subjects with a six-electrode Biopac system. The recorded data were passed through parallel banks of Butterworth digital filters with fixed frequency ranges (0.2-3 Hz for FEOG, 7-12 Hz and 13-22 Hz for FEEG, and 30-450 Hz for FEMG) to obtain the desired frequency bands. Features were extracted as the root mean square (RMS) value within non-overlapping 256 ms windows. The subtractive fuzzy c-means clustering method (SFCM) was applied to partition the feature space and generate initial Takagi-Sugeno fuzzy rules. The average discrimination ratio for eight facial gestures was between 93.04% and 96.99%, depending on how the features were grouped and fused. The experimental results show that the interface discriminates the eight primary facial gestures with high accuracy and robustness [32].
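The RMS feature over non-overlapping 256 ms windows is straightforward to compute. The sampling rate is not given above, so it is a parameter in this Python sketch, and the example signal is hypothetical.

```python
import math


def windowed_rms(samples, fs_hz, window_ms=256):
    """RMS of each non-overlapping window; a trailing partial window is dropped."""
    win = int(round(fs_hz * window_ms / 1000.0))
    if win < 1:
        raise ValueError("window shorter than one sample")
    return [math.sqrt(sum(x * x for x in samples[i:i + win]) / win)
            for i in range(0, len(samples) - win + 1, win)]


# Hypothetical signal sampled at 1000 Hz: two full 256-sample windows plus a remainder.
print(windowed_rms([3.0] * 256 + [-4.0] * 256 + [1.0] * 10, 1000))
```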

Vandhana and Prabhu presented a measurement system for tracking and recognizing the eye movements of patients, using EOG signals for four tasks (right, left, up and down) recorded from five subjects with a five-electrode system. The acquired signals were amplified by a signal conditioning amplifier, and features were extracted using the wavelet transform. A fuzzy logic technique classified the signals into the four EOG patterns. The thresholds differed for each eye movement: 0.1609, 0.1596, 0.1152 and 0.1209 for right, left, up and down using the wavelet transform, and 0.1152, 0.1209, 0.1596 and 0.1609 for fuzzy logic. The resulting control signal was passed to an MSP430 controller, which was used to control electrical devices [33].

Using linear discriminant analysis classification technique

Kuo et al. designed an EOG-based sleep monitoring system for people with sleep disorders. Signals covering five sleep stages were recorded from sixteen subjects with a wearable wireless glasses sensor at a sampling rate of 256 Hz. A Butterworth band-pass filter with a 0.5-30 Hz pass band removed artifacts and emphasized sleep-related physiological activity. Multi-scale entropy (MSE), an autoregressive (AR) model and multi-scale line length (MLL) were used to extract features from the filtered signals, and linear discriminant analysis (LDA) classified the five sleep stages from the MSE values, AR coefficients and MLL values. Compared with manual scoring, the method achieved an accuracy of 83% over the sixteen subjects. The study concluded that EOG-based sleep scoring is a feasible solution for home sleep monitoring because it tracks sleep stages accurately [34].
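Line length sums the absolute sample-to-sample differences. One way to make it multi-scale, assumed here by analogy with multi-scale entropy rather than taken from Kuo et al., is to coarse-grain the signal by averaging non-overlapping blocks before computing the line length. The scales in this Python sketch are hypothetical.

```python
def coarse_grain(samples, scale):
    """Average consecutive non-overlapping blocks of `scale` samples."""
    n = len(samples) // scale
    return [sum(samples[i * scale:(i + 1) * scale]) / scale for i in range(n)]


def line_length(samples):
    """Sum of absolute differences between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:]))


def multiscale_line_length(samples, scales=(1, 2, 4)):
    """Line length of the coarse-grained signal at each scale."""
    return [line_length(coarse_grain(samples, s)) for s in scales]


print(multiscale_line_length([0, 2, 0, 2, 0, 2, 0, 2]))
print(multiscale_line_length(list(range(8))))
```

Fast oscillations disappear at coarser scales while slow trends remain, so the values across scales describe how signal activity is distributed over time scales.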

Wissel and Palaniappan designed an EOG-based human-computer interface for a virtual keyboard, using signals acquired from five subjects with a five-electrode system and sampled at 256 Hz. Features were extracted with Euclidean distance, the FFT and wavelet decomposition, and the signals were classified with ANN and LDA classifiers. The experiment covered six tasks (right, left, up, down, long blink and short blink), and an average accuracy of 94.90% was obtained using DWT and LDA [35].
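For two classes, Fisher's linear discriminant projects features onto w = S_w^-1 (m_a - m_b), where S_w is the pooled within-class scatter and m_a, m_b are the class means. The Python sketch below is restricted to 2-D features and two classes (the study had six tasks) and uses hypothetical data; the midpoint threshold assumes equal class priors.

```python
def mean2(vectors):
    """Component-wise mean of 2-D vectors."""
    n = len(vectors)
    return [sum(v[0] for v in vectors) / n, sum(v[1] for v in vectors) / n]


def fisher_lda_2d(class_a, class_b):
    """Two-class Fisher LDA for 2-D features.

    Returns (w, threshold) with w = S_w^-1 (m_a - m_b); a point x is assigned
    to class a when w . x > threshold.
    """
    ma, mb = mean2(class_a), mean2(class_b)
    s = [[0.0, 0.0], [0.0, 0.0]]                 # pooled within-class scatter
    for vectors, m in ((class_a, ma), (class_b, mb)):
        for v in vectors:
            d = (v[0] - m[0], v[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    if det == 0.0:
        raise ValueError("singular scatter matrix; add samples or regularize")
    inv = [[s[1][1] / det, -s[0][1] / det],
           [-s[1][0] / det, s[0][0] / det]]
    diff = (ma[0] - mb[0], ma[1] - mb[1])
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    # Decision threshold: projection of the midpoint between the class means.
    threshold = 0.5 * (w[0] * (ma[0] + mb[0]) + w[1] * (ma[1] + mb[1]))
    return w, threshold


def predict(x, w, threshold):
    return "a" if w[0] * x[0] + w[1] * x[1] > threshold else "b"


# Hypothetical 2-D features (e.g. two wavelet-band energies) for two tasks.
blink = [(2, 2), (3, 2), (2, 3), (3, 3)]
rest = [(0, 0), (1, 0), (0, 1), (1, 1)]
w, thr = fisher_lda_2d(blink, rest)
print(predict((2.8, 2.6), w, thr), predict((0.2, 0.4), w, thr))
```

Multi-class LDA generalizes this by finding several discriminant directions, but the two-class case shows the core idea.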

Using nearest neighbor classification technique

Usakli et al. developed an electrooculography-based HCI to operate a virtual keyboard. Signals for four tasks (right, left, up and down) were acquired from ten subjects through an LT1167 instrumentation amplifier at a sampling rate of 176 Hz. During preprocessing, a filter with a 0.15-31 Hz pass band was used to reject high-frequency noise and power-line noise. Features were extracted using Euclidean distance, and the nearest neighbor algorithm classified the EOG signals into different patterns. The EOG system performed well: disabled users could type a five-letter word in 25 s on average, compared with 105 s with an EEG-based device, and an accuracy of 95% was achieved [36].
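Nearest neighbor classification assigns a feature vector the label of the closest training example under Euclidean distance; k-nearest neighbors takes a majority vote among the k closest. A minimal Python sketch with hypothetical 2-D features (for example, horizontal and vertical channel amplitudes in μV).

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(x, training, k=1):
    """training is a list of (feature_vector, label); k=1 is plain nearest neighbor.

    Ties in the vote are not handled specially; an odd k reduces them.
    """
    nearest = sorted(training, key=lambda item: euclidean(x, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical templates: (horizontal uV, vertical uV) -> eye movement.
training = [((100, 0), "right"), ((-100, 0), "left"), ((0, 100), "up"), ((0, -100), "down")]
print(knn_classify((80, 10), training))
```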

Barcia developed an eye-controlled joystick using EOG signals acquired from twenty subjects with a five-electrode system for nine tasks: up, up-left, left, down-left, down, down-right, right, up-right and blink. The cutoff frequency was initially set at 0.1 Hz, with a maximum linear gain of 60 Hz. A 50 Hz notch filter was applied to remove power-line interference. Features were extracted using Euclidean distance, and a k-nearest neighbors (KNN) algorithm classified them, achieving an average classification accuracy of 91.11% for the nine eye movements [37].

Desai designed and developed a virtual keyboard for paralyzed people who cannot speak, using EOG signals collected from ten subjects with a five-electrode system. An INA128 instrumentation amplifier amplified the horizontal and vertical eye movement signals, which were sampled at 176 Hz and preprocessed with a Butterworth filter with a pass band of 0.01 to 50 Hz. A nearest neighbor algorithm classified the signals with an accuracy of 95%. This EOG-based HCI allows disabled people to communicate effectively and inexpensively with their surroundings using only their eye movements [38].

Using support vector machine classification technique

Bulling et al. examined eye-based HCI using wearable EOG glasses. Signals were acquired from eight subjects with a five-electrode Mobi8 EOG device at a sampling rate of 128 Hz during five activities: copying a text, reading a printed paper, taking handwritten notes, watching a video and browsing the web. The signals were preprocessed with a median filter (0 to 30 Hz), which removes pulses narrower than about half its window size; motion artifacts were compensated with adaptive filters, blinks were detected by template matching, and saccades were detected with continuous wavelet transform saccade detection (CWT-SD). Features were selected with the minimum redundancy maximum relevance (mRMR) technique, and a support vector machine (SVM) classified the signals. The SVM achieved a mean precision of 76.1% and a mean recall of 70.5% over all participants [39].
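The median filter property quoted above, removing pulses narrower than about half the window while preserving step edges such as saccades, can be checked with a short Python sketch. The window length and the test signals are assumptions; edges are handled by shrinking the window.

```python
def median_filter(samples, window):
    """Sliding median with an odd window; near the edges the window shrinks."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    out = []
    for i in range(len(samples)):
        neighbourhood = sorted(samples[max(0, i - half):i + half + 1])
        out.append(neighbourhood[len(neighbourhood) // 2])
    return out


spike = [0, 0, 0, 9, 9, 0, 0, 0]   # 2-sample pulse (e.g. noise)
step = [0, 0, 0, 0, 5, 5, 5, 5]    # step edge (e.g. a saccade)
print(median_filter(spike, 5), median_filter(step, 5))
```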

Sun et al. developed a biosignal-based human computer interface for an assistive robot using EOG signals acquired from four subjects (3 M, 1 F) with a five-electrode system. The signals were sampled at 1000 Hz with an NT9200 AC-EOG amplifier and stored as 16-bit integers. Relevant features were extracted with several techniques: entropy, spatial entropy and peak-to-valley ratio. A back-propagation (BP) neural network, a classification support vector machine (C-SVM) and a particle swarm optimization SVM (PSO-SVM) were used to classify four kinds of eye movement signals, including saccades, single blinks and double blinks. The BP and C-SVM classifiers yielded the same classification accuracy of approximately 96%, while the hybrid PSO-SVM obtained the highest accuracy, 98.71%, and was more stable than the back-propagation network [40].

Nakanishi et al. presented a voluntary eye-blink detection technique using the electrooculogram to control a brain computer interface with four tasks. EOG signals were collected from eight subjects with a five-electrode system and preprocessed with an FIR filter to extract the 0.53-15 Hz band. Features were extracted from the preprocessed signals using vertical peak amplitude, horizontal peak amplitude and maximum cross-correlation, and a support vector machine classified them. An average simulation accuracy of 97.28% was obtained, and among the individual tasks the wink task performed best, with an accuracy of 97.69% [41].
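How the maximum cross-correlation feature was defined is not stated above. One common form, assumed here, is the largest normalized (Pearson) correlation between a template, such as a stored blink waveform, and any equal-length segment of the signal. A minimal Python sketch with hypothetical signals.

```python
import math


def max_normalized_xcorr(signal, template):
    """Largest Pearson correlation between the template and any equal-length
    segment of the signal. Returns -1.0 if no segment can be compared."""
    m = len(template)
    t_mean = sum(template) / m
    t_dev = [t - t_mean for t in template]
    t_norm = math.sqrt(sum(d * d for d in t_dev))
    best = -1.0
    for start in range(len(signal) - m + 1):
        seg = signal[start:start + m]
        s_mean = sum(seg) / m
        s_dev = [s - s_mean for s in seg]
        s_norm = math.sqrt(sum(d * d for d in s_dev))
        if s_norm == 0.0 or t_norm == 0.0:      # flat segment: correlation undefined
            continue
        r = sum(a * b for a, b in zip(s_dev, t_dev)) / (s_norm * t_norm)
        best = max(best, r)
    return best


blink_template = [0, 1, 0]
print(max_normalized_xcorr([0, 0, 2, 0, 0], blink_template))
```

Because the correlation is normalized, the feature responds to waveform shape rather than amplitude, which helps when peak amplitudes differ between users.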

Conclusion

Human activity detection has become an important application area for pattern recognition. In this paper we examined eye movement analysis as a sensing modality for activity recognition, focusing on EOG classification algorithms for designing EOG-based HCIs. With EOG-based HCIs, disabled people whose motor neurons controlling voluntary muscles are damaged can convey their intentions correctly to a certain extent. The review shows that HCIs built on different biosignals can help improve the quality of life of disabled persons, and that the EOG is a prominent biosignal carrying valuable information about the nervous system. The review also suggests that neural networks and SVMs achieve the highest classification accuracies in EOG-based HCI designs. At the same time, many EOG applications are being developed, and several devices already perform well for specific problems. Nevertheless, further algorithms and interaction metaphors still need to be developed and evaluated before communication with immobilized people through eye movements alone can advance significantly.

References

- Vamsi T, Mishra S, Shambi JS. EOG based text communication system for physically disabled and speech impaired, 2008.

- Cincotti F, Mattia D, Aloise F. Non-invasive brain computer interface system: towards its application as assistive technology, Proceed ICBR 2008.

- Lehtonen L. EEG-based brain computer interfaces. Helsinki University of Technology, 2002.

- Dix A, Finlay J, Abowd G, Beale R. Human-computer interaction, 3rd Ed. Prentice Hall, New York, 2003.

- Sharp H, Rogers Y, Preece J. Interaction design-Beyond human computer interaction. 2nd Ed, John Wiley & Sons, New York, 2007.

- Kim Y, Doh NL, Youm Y, Chung WK. Robust discrimination method of the electrooculogram signals for human-computer interaction controlling mobile robot. Proceed ICIASC 2007.

- Norris G, Wilson E. The eye mouse, an eye communication device. IEEE Transact Biomed Eng 1997.

- Simpson T, Broughton C, Gauthier MJA, Prochazka A. Tooth-click control of a hands-free computer interface. IEEE Transact Biomed Eng 2008; 55: 2050-2056.

- Venkataramanan S, Prabhat P, Choudhury SR, Nemade HB, Sahambi JS. Biomedical instrumentation based on electrooculogram (EOG) signal processing and application to a hospital alarm system. Proceed 2nd ICISIP 2005.

- Panicker RC, Puthusserypady S, Sun Y. An asynchronous P300 BCI with SSVEP-based control state detection. IEEE Transact Biomed Eng 2011; 58: 1781-1788.

- Evans DG, Drew R, Blenkhorn P. Controlling mouse pointer position using an infrared head-operated joystick. IEEE Transact Rehab Eng 2000.

- Sandesh P, Sagar A, Romil K. Eye controlled wheelchair. Int J Sci Eng Res 2012; 3: 1-4.

- Ubeda A, Ianez E, Azorin JM. Wireless and portable EOG-based interface for assisting disabled people. IEEE/ASME Transact Mechatron 2011; 16: 870-873.

- Singh H, Singh J. A review on electrooculography. IJAET 2012; 3: 115-122.

- Hema CR, Paulraj MP, Ramkumar S. Classification of eye movements using electrooculography and neural networks. IJHCI 2014; 5: 51-63.

- Barea R, Boquete L, Mazo M, Lopez E, Bergasa LM. EOG guidance of a wheelchair using neural network. Proceed 15th ICPR 2000; 4: 668-671.

- Barea R, Boquete L, Mazo M, Lopez E. System for assisted mobility using eye movements based on electrooculography. IEEE Transact NSRE 2002.

- Sherbeny AS, Badawy S. Eye computer interface (ECI) and human machine interface applications to help handicapped persons. TOJEEE 2006; 5: 549-553.

- Guven A, Kara S. Classification of electro-oculogram signals using artificial neural network. ESA 2006; 31: 199-205.

- Banerjee A, Datta S, Konar A, Tibarewala DN. Development strategy of eye movement controlled rehabilitation aid using electrooculogram. IJSER 2012; 3: 1-7.

- Ramkumar S, Hema CR. Recognition of eye movement electrooculogram signals using dynamic neural networks. KJCS 2013; 6: 12-20.

- Hema CR, Paulraj MP, Ramkumar S. Classification of eye movements using electrooculography and neural networks. IJHCI 2014; 5: 51-63.

- Hema CR, Ramkumar S, Paulraj MP. Identifying eye movements using neural networks for human computer interaction. IJCA 2014; 105: 18-26.

- Ramkumar S, Sathesh Kumar K, Emayavaramban G. EOG signal classification using neural network for human computer interaction. IJCTA 2016; 2: 1-11.

- Ramkumar S, Sathesh Kumar K, Emayavaramban G. Nine states HCI using electrooculogram and neural networks. IJET 2017; 8: 3056-3064.

- Kim KH, Kim HK, Kim J, Son W, Lee SY. A biosignal-based human interface controlling a power-wheelchair for people with motor disabilities. ETRIJ 2006; 28: 111-114.

- Fang F, Shinozaki T, Horiuchi Y, Kuroiwa S. HMM based continuous EOG recognition for eye-input speech interface. Proceed Int Speech Commun Assoc 2012.

- Trikha M, Bhandari A, Gandhi T. Electrooculogram classification of microcontroller based interface system. SIEDS 2007.

- Khan A, Memon MA, Jat Y, Khan A. Electrooculogram based interactive robotic arm interface for partially paralytic patients. IJITEE 2012.

- Postelnicu C, Girbacia F, Talaba D. EOG-based visual navigation interface development. ESA 2012; 39: 10857-10866.

- Tsai JZ, Lee CK, Wu CM, Wu JJ, Kao SKP. A feasibility study of an eye-writing system based on electro-oculography. JMBE 2008; 28: 39-46.

- Rezazadeh IM, Firoozabadi SM, Hashemi R, Golpayegani SM. A novel human-machine interface based on recognition of multi-channel facial bioelectric signals. APESM 2011; 34: 497-513.

- Vandhana P. A novel efficient human computer interface using an electrooculogram. IJRET 2014; 3: 799-803.

- Kuo C. An EOG-based sleep monitoring system and its application on on-line sleep-stage sensitive light control. Proceed Int Conf Physiol Comput Syst 2014; 1: 20-30.

- Palaniappan R. Considerations on strategies to improve EOG signal analysis. ACM IJALR 2011; 2: 6-21.

- Usakli AB, Gurkan S, Aloise F, Vecchiato G, Babiloni F. On the use of electrooculogram for efficient human computer interfaces. CIN 2010.

- Barcia JC. Human electrooculography interface. Lisbon Technical University 2010.

- Desai YS. Natural eye movement & its application for paralyzed patients. IJETT 2013; 4: 679-686.

- Bulling A, Gellersen H, Troster G. Eye movement analysis for activity recognition using electrooculography. IEEE TPAMI 2010.

- Sun L, Wang S, Zhang J, Xiao H. Research on electrooculography classification based on multiple features. IJDCTA 2012; 6: 35-42.

- Nakanishi M, Mitsukura Y, Wang Y, Wang YT, Jung TP. Online voluntary eye blink detection using electrooculogram. Int Symp Nonlinear Theor Appl 2012; 114-117.