ISSN: 0970-938X (Print) | 0976-1683 (Electronic)

Biomedical Research

An International Journal of Medical Sciences

Research Article - Biomedical Research (2018) Volume 29, Issue 8

A non-invasive tongue controlled wireless assistive device for quadriplegics

VIT University, Vellore, Tamil Nadu, India

Accepted date: February 15, 2018

DOI: 10.4066/biomedicalresearch.29-17-1620

Individuals with spinal cord injuries, particularly quadriplegic patients, lead exceptionally difficult lives. Assistive technology is needed to ease the difficulties these patients face, and several technologies have been developed to help them. This project proposes one such technology: a non-invasive, tongue controlled wireless assistive device with head-mounted IR sensors placed bilaterally, on either side of the cheeks. It has been designed to be easy for quadriplegic patients to use. The patient's tongue movements are detected by an easy-to-use infrared sensor, converted into commands, and transmitted wirelessly for display on an LCD screen. Changes in the reflected IR power caused by tongue movement allow the patient to issue several commands.

Keywords

Non-invasive, Infrared sensor, Assistive device, Tongue controlled, Tongue controlled assistive device (TCAD).

Introduction

There have been numerous advances in biomedical engineering over the past few decades. Approximately 250,000 to 500,000 people worldwide suffer from a spinal cord injury, and about 47% of them are quadriplegic. Of these, about 89% are discharged from hospital to private homes, whereas about 4.3% are discharged to nursing homes. The main causes of paralysis are stroke, neuromuscular disorders and spinal cord injuries. A number of assistive technologies have been developed, but only a few have gained worldwide acceptance. Assistive technology (AT) enables patients to carry out their routine tasks on their own with greater ease and comfort, helping with activities such as mobility, hearing, vision, self-care and safety. However, such a device must be highly reliable, in terms of both safety and accurate operation, for patients with various disabilities, and it should provide sufficient degrees of freedom. Existing assistive devices for severely disabled people rely on eye-motion tracking, chin/head and hand movements, brain waves, muscle electrical activity or voice commands, but each has drawbacks and limitations that prevent users from depending on it completely. A device is therefore needed that provides an efficient solution while minimizing the discomfort caused to patients.

Switch-controlled assistive devices provide limited degrees of freedom. They benefit only users whose head movements are not restricted, and the patient's head must remain within the range of the sensors. A tongue-based assistive technology offers an alternative.

The tongue and mouth occupy almost as much of the brain's sensory and motor cortex as the fingers and hand, which gives the tongue an inherent capability for the motor control required in manipulation tasks. Amyotrophic lateral sclerosis (ALS) and stroke cause severe damage to the spinal cord and brain, resulting in severe disabilities. Patients with such disorders must depend on available assistive technologies to lead a self-supportive life.

A patient who is physically tethered to a wheeled mobility device or a computer has less independent mobility and fewer opportunities to communicate. Untethered control is therefore preferred over tethered control, as it greatly improves the user's day-to-day quality of life.

There has been considerable research on tongue controlled assistive devices, with numerous techniques for interfacing with the tongue, including electrical contacts, induction coils and magnetic sensors. Struijk developed an inductive tongue computer interface using induction coils [1]. A ferromagnetic element was fixed to the tongue, and an induction coil was placed inside the mouth. When the tongue, carrying the ferromagnetic material, touched the coil, the coil voltage changed, and this change was used to determine the patient's intention. The main disadvantage of this device was the burden it placed on users, such as piercing or gluing the magnet onto the tongue and placing induction coils inside the mouth. In addition, wires in a silicone tube had to be held in the mouth to carry the signal to the device, which caused considerable discomfort. Huo et al. eliminated the wires by introducing wireless technology [2]. They used magnetic induction to track tongue movement: the sensor output varies with the tongue's motion, and these variations were recorded as user commands.

Subsequent studies showed that the Tongue Drive System (TDS) is more efficient than other conventional assistive devices for quadriplegics. In the TDS, a magnet placed on the tongue is sensed by Hall-effect sensors, and the data are transmitted wirelessly to a PC. The system helps people with disabilities lead an independent life and offers faster, better and more convenient control than most existing assistive technologies [3]. The TDS was evaluated with patients with high-level spinal cord injury (C1-C3). It detects specific tongue movements and converts them into user commands by determining the position of a small permanent magnetic tracer placed on the patient's tongue. The external TDS (eTDS) is mounted on a wireless headset and interfaced with a portable PC and a powered wheelchair (PWC) [4].

The TDS thus provided a minimally invasive, easy-to-use wearable device for people with the most severe physical disabilities, with an improved aesthetic appearance [5]. With 87% of commands completed correctly, the TDS had a response time of 0.8 s, yielding an information transfer rate of approximately 130 bits/min [2]. In one study in which quadriplegic participants controlled a PC with the TDS, their performance was almost identical to that of able-bodied participants [6]. The intraoral TDS (iTDS) superseded the earlier model. It is completely concealed: the headset is replaced by a device shaped like an intraoral retainer that sits against the roof of the mouth. Magnetic sensors at the four corners of the iTDS board measure variations in the magnetic field to produce user commands; however, among other disadvantages, its main drawback was user discomfort [7].
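The 130 bits/min figure can be checked against the Wolpaw information transfer rate, a definition commonly used in brain-computer interface and assistive-device studies. The sketch below is only a consistency check under stated assumptions: it assumes six available commands (N = 6), an accuracy of P = 0.87 and 0.8 s per command, and neither the number of commands nor the exact formula used in [2] is stated in this paper. The function name `wolpaw_itr_bits_per_min` is hypothetical.

```python
import math


def wolpaw_itr_bits_per_min(n_commands: int, accuracy: float, seconds_per_command: float) -> float:
    """Information transfer rate (bits/min) using the Wolpaw definition.

    bits per selection = log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1))
    """
    if n_commands < 2 or not 0.0 < accuracy <= 1.0 or seconds_per_command <= 0:
        raise ValueError("need N >= 2, 0 < P <= 1 and a positive selection time")
    n, p = n_commands, accuracy
    bits = math.log2(n)
    if p < 1.0:
        # Error terms vanish at perfect accuracy (log2(0) is avoided).
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n - 1))
    # Convert bits per selection into bits per minute.
    return bits * 60.0 / seconds_per_command


# Assumed values: six commands, 87% accuracy, 0.8 s per command.
print(round(wolpaw_itr_bits_per_min(6, 0.87, 0.8), 1))  # 129.4
```

Under these assumptions each selection carries about 1.73 bits, and 75 selections per minute give roughly 129 bits/min, consistent with the reported figure of approximately 130 bits/min.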

The limitations of the iTDS led to research on an orthodontic base with five Hall-effect sensors, placed inside or outside the mouth, to control a wheelchair with five commands: forward, reverse, right, left and stop [8]. Again, however, piercing a magnet onto the tongue causes severe discomfort, along with some interference effects. More generally, current technology is partially or completely invasive, which causes discomfort for the user. The main challenges in this area are reliably inferring what the user wants to convey while maximizing comfort and ease of use. This is where OTCAD plays a major role. The Optical Tongue Controlled Assistive Device (OTCAD) supports paralyzed individuals with a wireless, non-invasive design. It consists of an IR reflective sensor, comprising a transmitter and a receiver, which detects tongue movements [9-12].

Proposed Methodology

System overview

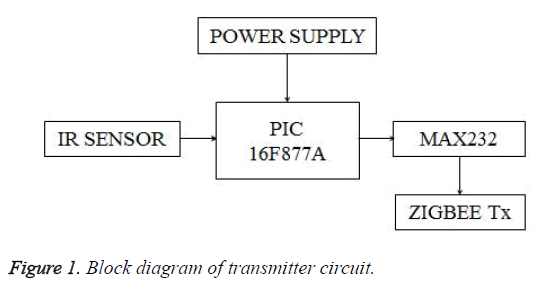

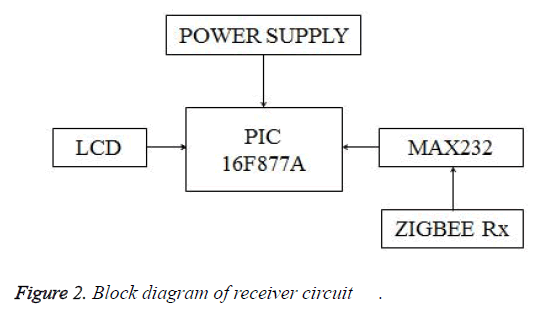

This work proposes a non-invasive, wireless, IR-based tongue controlled assistive device. The IR sensors are placed bilaterally on the cheeks. The device detects tongue movements and converts them into commands, which are then displayed on an LCD. The caretaker can easily understand what the user is trying to convey and provide the required help. The device thus bridges the gap between technology and patient needs: patients can overcome their physical constraints and communicate their basic needs to the caretaker easily and accurately. Figures 1 and 2 show the block diagrams of the transmitter and receiver circuits.

Sensing tongue movement

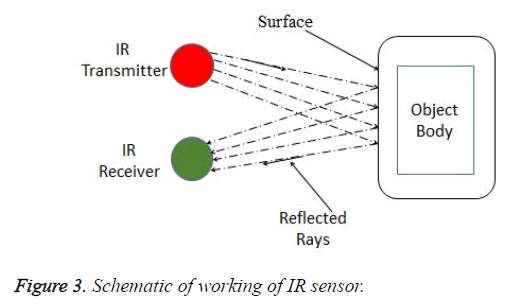

Four IR sensors are mounted on a headband, two on each side of the face, positioned 1 cm above the cheek. A standard obstacle-detecting IR sensor is used to detect the tongue movements. When the patient pushes the tongue toward a sensor from inside the cheek, the sensor registers an input, which is taken as one command. The microcontroller reads the sensor data and transmits it to the receiver circuit over a ZigBee wireless link. The patient can convey up to six commands: four by activating each sensor individually, and two more by activating both sensors on the same side together.

Each sensor consists of an IR transmitter and a receiver. When the tongue is at rest, the transmitter emits IR light, the receiver detects it after reflection from the cheek surface, and the sensor is in its ON state. Each sensor produces a corresponding output voltage. When the tongue interrupts the IR path between transmitter and receiver, the resulting change in voltage switches the sensor to its OFF state. The microcontroller takes this change in voltage as an input, and the corresponding command is displayed. In this way, the four single-sensor commands are produced whenever a sensor is blocked by the cheek. A regulated 5 V power supply is provided to both circuits. Figure 3 shows a schematic of the IR sensor's operation.
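The paper does not give threshold values, so the following is only a minimal sketch of how a sensor voltage might be turned into the 0/1 value used later by the algorithm. It assumes each sensor's analogue output is sampled (for example by the microcontroller's A/D converter) and compared with that sensor's resting level, recorded while the tongue is at rest. The function name `sensor_states`, the resting voltages and the 0.5 V threshold are hypothetical. Because the comparison uses the size of the deviation, it does not depend on whether a particular module's output rises or falls when blocked.

```python
from typing import Sequence, Tuple

# Hypothetical threshold: how far (in volts) a sensor's output must move away
# from its resting level before that sensor is treated as blocked.
DEVIATION_THRESHOLD_V = 0.5


def sensor_states(voltages: Sequence[float],
                  rest_voltages: Sequence[float],
                  threshold: float = DEVIATION_THRESHOLD_V) -> Tuple[int, ...]:
    """Return one value per IR sensor: 1 = blocked, 0 = tongue at rest."""
    if len(voltages) != len(rest_voltages):
        raise ValueError("need one resting level per sensor")
    return tuple(
        1 if abs(v - rest) > threshold else 0
        for v, rest in zip(voltages, rest_voltages)
    )


# Hypothetical readings for IR1..IR4: only IR2 has moved far from its resting level.
print(sensor_states([3.1, 1.9, 3.2, 3.0], [3.1, 3.0, 3.2, 3.1]))  # (0, 1, 0, 0)
```

Many commercial obstacle-detecting modules already include an on-board comparator with a digital output; in that case the threshold is set on the module and the microcontroller reads the 0/1 value directly.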

Hardware Details

The microcontroller used in both circuits is the PIC16F877A, a CMOS flash-based 8-bit microcontroller that is easy to program, making it well suited to A/D applications in automotive, industrial, appliance and consumer products. An obstacle-detecting IR sensor operating in the near-infrared region (700 nm to 1400 nm) detects the changes caused by tongue movement. This type of sensor is widely used in obstacle-detection applications. A ZigBee module provides wireless transmission of the signal from the transmitter circuit to the receiver circuit, and the commands are displayed on an LCD module. The specifications of the components are listed in Table 1.

| Component | Specification | Supply voltage (V) |
|---|---|---|
| IR sensor | VEE00051 | 4.5-6 |
| Microcontroller | PIC16F877A | 3-5 |
| Wireless transmitter | 2.4 GHz ZigBee CC2530 module | 3-5.5 |

Table 1: Component specification.

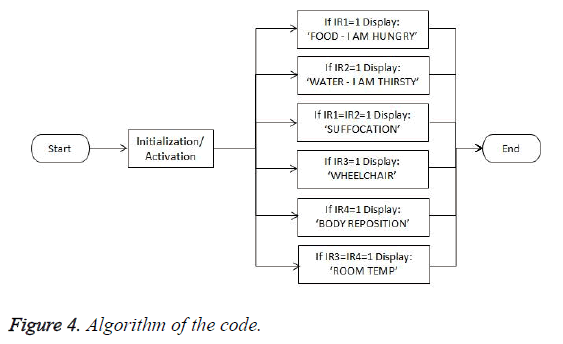

Algorithm

This section explains the algorithm that generates all of the commands described above. If a sensor input is 1, the code issues an output command that depends on which sensor has switched to its OFF (blocked) state. Figure 4 shows the algorithm, which is the same for all sensors. The activation phase begins when the patient blocks a particular sensor with the tongue, according to the need to be communicated. The microcontroller reads the values generated by blocking each sensor. A value of 1 indicates that the sensor is blocked, and the corresponding command is sent for transmission. The command is then displayed on the LCD of the receiver.
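The activation phase can be sketched as a polling loop over successive readings of the four sensors. This is a minimal sketch, not the authors' firmware: the debounce (a pattern must be read several times in a row before it counts) and the re-arming rule (only one command per activation, until every sensor returns to rest) are assumptions added for illustration. The names `activations`, `stable_samples`, `Pattern` and `REST` are hypothetical.

```python
from typing import Iterable, Iterator, Tuple

Pattern = Tuple[int, int, int, int]  # (IR1, IR2, IR3, IR4); 1 = blocked
REST: Pattern = (0, 0, 0, 0)         # all sensors unblocked: tongue at rest


def activations(readings: Iterable[Pattern], stable_samples: int = 3) -> Iterator[Pattern]:
    """Yield one sensor pattern per deliberate activation.

    Assumptions (not from the paper): a pattern is accepted only after it has
    been read `stable_samples` times in a row (debounce), and no further
    pattern is accepted until every sensor has returned to rest (re-arm).
    """
    armed = True
    candidate: Pattern = REST
    count = 0
    for pattern in readings:
        if pattern == REST:
            # Tongue back at rest: ready for the next command.
            armed, candidate, count = True, REST, 0
            continue
        if not armed:
            continue  # still holding the command that was already sent
        if pattern == candidate:
            count += 1
        else:
            candidate, count = pattern, 1  # new pattern: restart the debounce count
        if count >= stable_samples:
            armed = False
            yield candidate  # in the device, this pattern would be sent for transmission


stream = [REST, (1, 0, 0, 0), (1, 0, 0, 0), (1, 0, 0, 0), (1, 0, 0, 0), REST,
          (0, 0, 1, 1), (0, 0, 1, 1), (0, 0, 1, 1), REST]
print(list(activations(stream)))  # [(1, 0, 0, 0), (0, 0, 1, 1)]
```

Re-arming on rest keeps one long tongue press from being sent repeatedly, and the debounce filters out brief glitches; the sample count would need tuning on real hardware.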

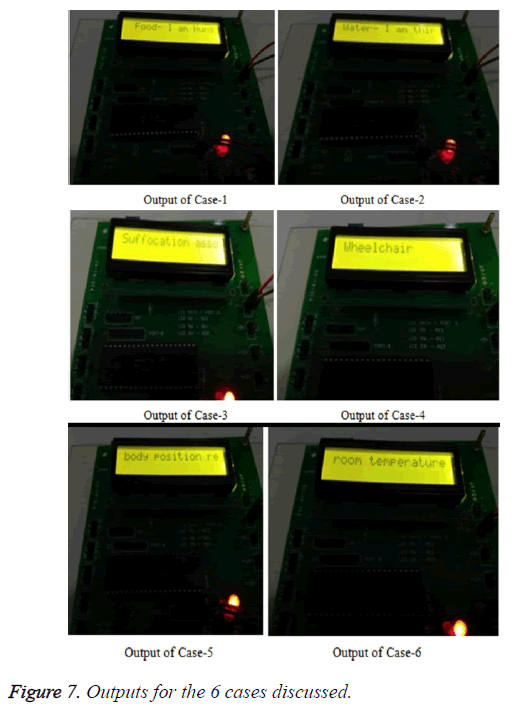

The code handles six cases:

Case 1: IR1=1; IR2=IR3=IR4=0 Display: ‘FOOD- I AM HUNGRY’

Case 2: IR2=1; IR1=IR3=IR4=0 Display: ‘WATER- I AM THIRSTY’

Case 3: IR1=IR2=1; IR3=IR4=0 Display: ‘SUFFOCATION’

Case 4: IR3=1; IR1=IR2=IR4=0 Display: ‘WHEELCHAIR’

Case 5: IR4=1; IR1=IR2=IR3=0 Display: ‘BODY REPOSITION’

Case 6: IR3=IR4=1; IR1=IR2=0 Display: ‘ROOM TEMPERATURE’
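The six cases amount to a lookup from the four-bit sensor pattern to a display message. The following is a minimal sketch with the hypothetical names `COMMANDS` and `decode`. Treating every other pattern (no sensor, a cross-side pair, three or four sensors) as "no command" is an assumption, since the paper does not say how such inputs are handled.

```python
from typing import Dict, Optional, Tuple

# Sensor pattern (IR1, IR2, IR3, IR4) -> LCD message, following Cases 1-6.
COMMANDS: Dict[Tuple[int, int, int, int], str] = {
    (1, 0, 0, 0): "FOOD- I AM HUNGRY",     # Case 1
    (0, 1, 0, 0): "WATER- I AM THIRSTY",   # Case 2
    (1, 1, 0, 0): "SUFFOCATION",           # Case 3: both sensors on one side
    (0, 0, 1, 0): "WHEELCHAIR",            # Case 4
    (0, 0, 0, 1): "BODY REPOSITION",       # Case 5
    (0, 0, 1, 1): "ROOM TEMPERATURE",      # Case 6: both sensors on the other side
}


def decode(ir1: int, ir2: int, ir3: int, ir4: int) -> Optional[str]:
    """Return the message for a sensor pattern, or None if it matches no case."""
    return COMMANDS.get((ir1, ir2, ir3, ir4))


print(decode(1, 1, 0, 0))  # SUFFOCATION
```

A table lookup keeps the mapping in one place, so adding or renaming a command only changes the dictionary, not the control flow.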

The data from the transmitter circuit are transmitted wirelessly over a ZigBee link to the receiver circuit, and the commands are displayed on the LCD in the receiver circuit.
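The paper does not describe the format of the data sent over ZigBee. As a purely hypothetical example, assuming the two ZigBee modules pass serial bytes transparently between the microcontrollers, the transmitter could send a three-byte frame: a start marker, a bitmask with one bit per sensor, and an XOR checksum that lets the receiver discard corrupted frames. The start byte 0xAA and the functions `encode_frame` and `decode_frame` are illustrative names, not part of the original design.

```python
from typing import Optional, Tuple

START_BYTE = 0xAA  # hypothetical frame marker, not from the paper


def encode_frame(pattern: Tuple[int, int, int, int]) -> bytes:
    """Pack (IR1, IR2, IR3, IR4) into a 3-byte frame: start, bitmask, checksum."""
    mask = 0
    for i, bit in enumerate(pattern):
        if bit:
            mask |= 1 << i  # IR1 -> bit 0, ..., IR4 -> bit 3
    return bytes([START_BYTE, mask, START_BYTE ^ mask])


def decode_frame(frame: bytes) -> Optional[Tuple[int, ...]]:
    """Unpack a frame; return None if it is malformed or fails the checksum."""
    if len(frame) != 3 or frame[0] != START_BYTE:
        return None
    mask, check = frame[1], frame[2]
    if check != START_BYTE ^ mask or mask > 0x0F:
        return None
    return tuple((mask >> i) & 1 for i in range(4))


print(encode_frame((0, 0, 1, 1)).hex())  # aa0ca6
```

Because IEEE 802.15.4 frames already carry a CRC over the air, a checksum like this mainly guards the serial links between each microcontroller and its ZigBee module.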

Experimental Results

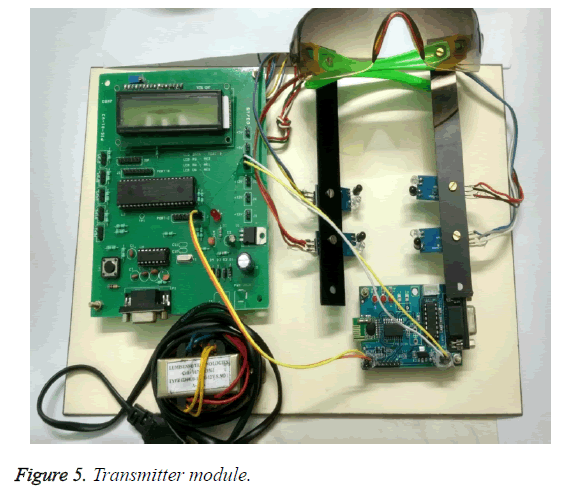

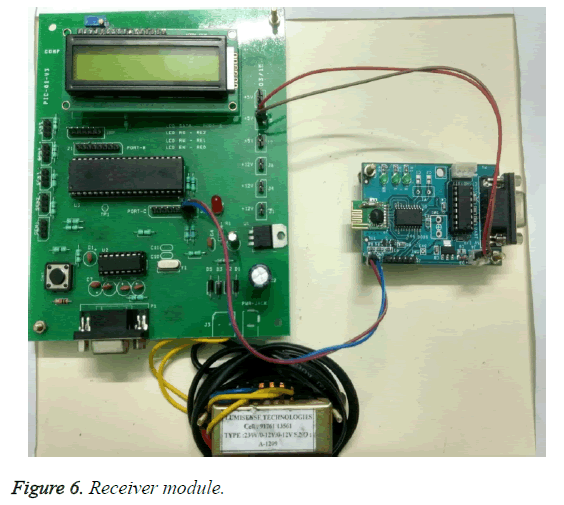

A prototype of the TCAD was developed and tested. The transmitter sent data wirelessly over ZigBee at ranges of up to 10 m. Figures 5 and 6 show the transmitter and receiver circuits that were built. With the tongue in the resting position, all sensor values were 0. The users operated the tongue controlled assistive device with ease and accuracy.

The users did not experience any physical discomfort while using the device. All six commands were generated successfully, either by blocking one sensor at a time or two at a time. Figure 7 shows the LCD output for each combination of activated IR sensors.

Conclusion

The non-invasive wireless TCAD is an adaptable and effective means of capturing a user's intention from tongue movements. It is a wearable, unobtrusive assistive device that offers the patient accuracy and ease of use. Optical IR sensors detect the tongue movements, and when the patient blocks a sensor with the tongue, the corresponding command is generated. The system worked efficiently and generated six distinct commands. With this device, patients can easily convey their basic needs to the caretaker. With further programming and a timer, more commands could be generated.

References

- Unnikrishnan Menon KA, Revathy J, Divya P. Wearable wireless tongue controlled assistive device using optical sensors. Conference on Wireless and Optical Communications Networks (WOCN) 2013; 1-5.

- Andreasen Struijk LNS. An inductive tongue computer interface for control of computers and assistive devices. IEEE Transactions on Biomedical Engineering 2006; 53: 2594-2597.

- Krishnamurthy G, Ghovanloo M. Tongue drive: A tongue operated magnetic sensor based wireless assistive technology for people with severe disabilities. IEEE International Symposium on Circuits and Systems 2006; 5551-5554.

- Huo X. Evaluation of the tongue drive system by individuals with high-level spinal cord injury. Eng Med Biol Soc 2009; 555-558.

- Huo X, Wang J, Ghovanloo M. A magneto-inductive sensor based wireless tongue computer interface. IEEE Transactions on Neural Systems and Rehabilitation Engineering 2008; 16: 497-504.

- Kim J, Park H, Bruce J, Rowles D, Holbrook J, Nardone B. Assessment of the tongue-drive system using a computer, a smartphone, and a powered-wheelchair by people with tetraplegia. IEEE Transactions on Neural Systems and Rehabilitation Engineering 2016; 24: 68-78.

- Park H, Kim J, Ghovanloo M. Intraoral tongue drive system demonstration. IEEE Biomedical Circuits and Systems Conference (BioCAS) 2012; 81.

- Mir MT, Rahat K, Ashoke KSG. Assistive Technology for Physically Challenged or Paralyzed Person Using Voluntary Tongue Movement. 5th International Conference on Informatics, Electronics and Vision (ICIEV) 2016; 293-296.

- Huo X. Tongue drive: a wireless tongue-operated means for people with severe disabilities to communicate their intentions. IEEE Communications Magazine 2012; 50: 128-135.

- Sadeghian EB. Command detection and classification in tongue drive assistive technology. Eng Med Biol Soc (EMBC) 2011; 5465-5468.

- Yousefi B. Preliminary assessment of tongue drive system in medium term usage for computer access and wheelchair control. 33rd Annual International Conference of the IEEE EMBS, Boston, Massachusetts, USA 2011; 5766-5769.

- Sardini E. Analysis of tongue pressure sensor for biomedical applications. IEEE International Symposium on Medical Measurements and Applications (MeMeA) 2014; 1-5.